Here’s How AI’s Real Potential Is Revealed and Why Radiologists Still Can’t Be Replaced

Abstract: A recent study, RadLE, highlights the limitations of AI in medical diagnostics, particularly in radiology, despite recent advances in the technology. The benchmark tested AI against human radiologists on 50 complex cases involving CT scans, MRIs, and X-rays. Board-certified radiologists achieved an accuracy of 83% and trainees scored 45%, while the top AI model, GPT-5, reached only 30%. The study underscores that while AI can assist in detecting anomalies, it cannot match the nuanced judgment, experience, and contextual understanding of human radiologists, and is not yet capable of replacing human expertise in critical diagnostic scenarios.

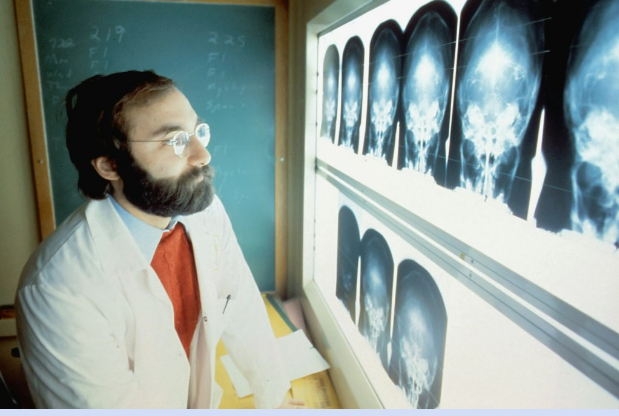

Artificial intelligence has been reshaping the world, from writing essays to generating realistic images and even composing music. Yet when it comes to medical diagnostics, the story is far more complex.

A recent study, the RadLE (Radiology’s Last Exam) benchmark, has revealed just how far even the most advanced AI models fall short in the face of human expertise.

RadLE challenged AI and human experts with 50 highly complex radiology cases, including CT scans, MRIs, and X-rays.

These weren’t routine scans; they were intentionally selected to test the limits of diagnostic ability, with subtle and overlapping pathologies that require deep clinical experience to interpret.

“The results were striking: board-certified radiologists scored 83%, trainees 45%, and the most advanced AI, GPT-5, managed only 30%. Other AI models performed even worse, with some barely surpassing 1% accuracy.”

These findings are sobering, especially in a world where headlines often claim that AI will replace doctors.

RadLE demonstrates that while AI shows promise, it cannot match the judgment, experience, or contextual understanding of human radiologists.

AI in Radiology

Artificial intelligence has been quietly transforming radiology for over a decade.

Early models could detect straightforward anomalies, such as fractures or lung nodules, helping radiologists flag areas of concern and reducing human error.

Hospitals embraced these tools to speed up workflows and triage urgent cases.

The emergence of large language models (LLMs) like GPT-5 raised expectations.

Suddenly, AI could interpret complex data, generate structured reports, and even provide reasoning about its findings. Media coverage suggested AI might soon outperform human clinicians.

“Yet images are not text. Radiology is a subtle art: a tiny shadow can indicate a life-threatening tumor in one patient but be harmless in another.”

Reading a scan requires pattern recognition combined with context, intuition, and clinical reasoning, skills AI has not yet mastered.

The RadLE Benchmark Test

RadLE was designed to be the ultimate test for AI in radiology. Unlike conventional benchmarks that use curated or simplified images, RadLE employed expert-level, high-stakes cases mimicking real-life diagnostic complexity.

Its purpose was to challenge AI in ways that matter clinically.

Test Cases

- 50 challenging cases: The benchmark included 50 radiology cases with CT scans, MRIs, and X-rays, deliberately chosen for difficulty.

- Complexity: Cases had overlapping pathologies, subtle anomalies, and variations that make misdiagnosis easy.

- Diversity: The selection reflected a broad spectrum of clinical conditions, ensuring AI couldn’t rely on simple pattern recognition.

Evaluation Methodology

- Blinded scoring: Human experts scored diagnostic accuracy without knowledge of the AI’s or other humans’ responses.

- Consistency checks: AI models were evaluated across multiple runs to assess reliability.

- Reasoning assessment: Models were tested across different reasoning modes to examine how they handle diagnostic questions.

- Error taxonomy: Researchers analyzed the AI’s mistakes to create a taxonomy of visual reasoning errors, a framework for understanding where AI fails.
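To make the scoring and consistency-check steps concrete, here is a minimal Python sketch. It assumes each model run has already been graded case by case (1 for a correct diagnosis, 0 for an incorrect one) and summarizes mean accuracy across runs along with the share of cases graded the same way in every run. The function names, the binary grading, and the stability measure are illustrative assumptions, not the statistics the RadLE authors actually used, and the scores are made up.

```python
from statistics import mean


def run_accuracy(scores):
    """Mean score for one run; each score is 1 (correct) or 0 (incorrect)."""
    if not scores:
        raise ValueError("a run needs at least one scored case")
    return mean(scores)


def summarize_runs(runs):
    """Summarize several runs over the same ordered list of cases.

    Returns a tuple: (mean accuracy across runs,
    fraction of cases graded identically in every run).
    """
    if not runs:
        raise ValueError("at least one run is required")
    n_cases = len(runs[0])
    if any(len(run) != n_cases for run in runs):
        raise ValueError("every run must score the same cases")
    mean_acc = mean(run_accuracy(run) for run in runs)
    # zip(*runs) regroups the grades by case instead of by run.
    stable = sum(1 for case_scores in zip(*runs) if len(set(case_scores)) == 1)
    return mean_acc, stable / n_cases


# Made-up grades for 5 hypothetical cases across 3 runs (not RadLE data).
example_runs = [
    [1, 0, 0, 1, 0],
    [1, 0, 1, 1, 0],
    [1, 0, 0, 0, 0],
]
mean_accuracy, stability = summarize_runs(example_runs)
print(f"mean accuracy: {mean_accuracy:.2f}, stable cases: {stability:.2f}")
```

A model can post the same average accuracy on every run while still changing its answer on individual cases, which is why a per-case stability figure is worth reporting alongside the headline number.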

Results: Humans vs. AI

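The findings were unambiguous:

- Board-certified radiologists: 83% accuracy

- Trainees: 45% accuracy

- GPT-5, the top AI model: 30% accuracy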

Even the most advanced AI fell well short of trainees and far behind experienced radiologists. These results emphasize that AI is not ready to replace humans in critical diagnostics.

Several factors contributed to AI’s poor performance:

Limited contextual understanding: Radiologists integrate patient history, symptoms, and prior scans. AI currently lacks this ability.
